RICE can be easily manipulated

I dislike RICE.

(Not the edible kind, which I enjoy in almost every cuisine.)

I am sure you have heard about this prioritization method and have probably even applied it. Today, I will make the case for why you should not use it and what to use instead.

What is RICE?

RICE is a prioritization method commonly used by product managers. I used it once and, boy, was it easy to bend to my own biases.

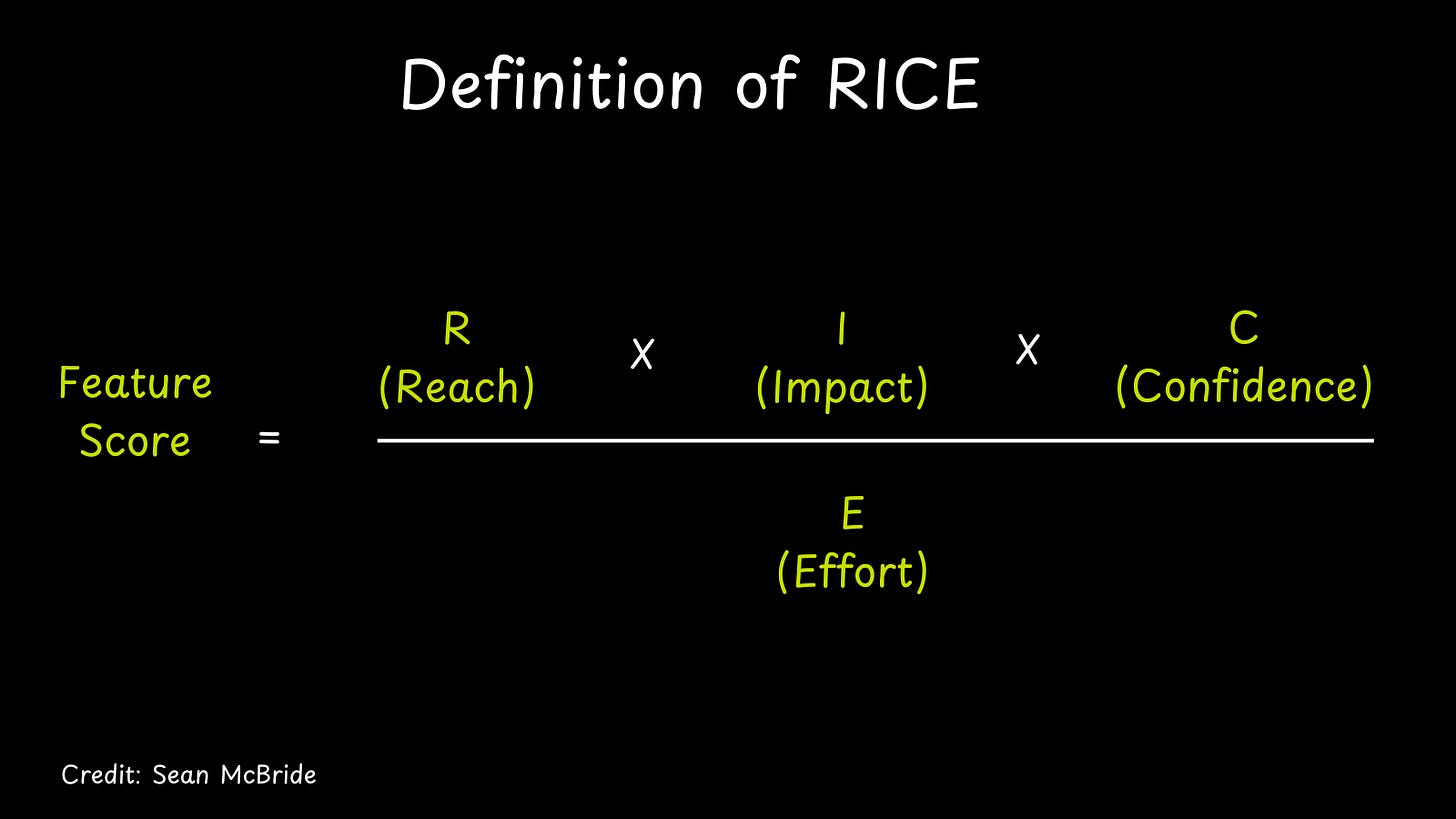

RICE is an acronym for Reach, Impact, Confidence, Effort.

If you have a laundry list of features and need to rank-order them, RICE is one way to do it. You score each feature on reach, impact, confidence and effort, combine the four numbers into a single score, and rank the list by that score.
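For reference, this is how the score is usually written for Intercom’s version of the method: the first three factors are multiplied together and effort divides the result. The values in the worked line are made up purely for illustration.

$$
\text{RICE score} = \frac{\text{Reach} \times \text{Impact} \times \text{Confidence}}{\text{Effort}}
\qquad\text{e.g.}\qquad
\frac{1000 \times 2 \times 0.5}{4} = 250
$$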

The method was pioneered by Sean McBride at Intercom. I am 100% confident it worked for them in their context. But just because it worked for them does not mean it will work in every other context.

When a framework or method becomes popular, it gets applied ad nauseam without regard to context. And if it involves plugging in numbers, all the better. After all, we love painting by numbers; it is so much easier.

Let’s look at how the method is used. Imagine you have 100 features and bugs to prioritize.

You give each one a score based on the four RICE factors.

For each letter, you assign a numeric value.

R(each)

This letter assesses the size of the customer or user reach: how many users does this feature or bug affect? It can be a number based on ARR, the number of customers, or the number of users. What number should we give a small reach: 1, 2.5, 10? What number should we give a large reach: 100, 250, 1000?

There is no benchmark; it’s subjective. What if we just use a dollar figure such as customer ACV? Maybe that could work. But then you would favor features requested by a few large whale customers, because their ARR makes the reach look huge.

I(mpact)

This letter assesses the magnitude of the impact the feature will have on your business: higher revenue, lower cost, reduced churn, and so on.

What number should we assign?

Who gets to decide high or low levels of impact?

More importantly, which impact matters most right now? Is the product strategy to reduce churn, grow NRR, or reduce cost? I hope you have some idea of what you are building and the associated business impact you hope to achieve. If not, that by itself is a problem beyond the scope of this article.

C(onfidence)

This letter assesses confidence, and it is extremely subjective.

If something is high impact, wouldn’t you want to raise your confidence by getting the necessary data or evidence? If I were told that a feature would improve the retention rate by 5% but we were unsure of its feasibility, I would damn well make sure we got that confidence.

So which confidence do you use to calculate the score: the earlier low one, or the later high one?

E(ffort)

Effort is the denominator in the score calculation. It can be low, medium, or high.

Let’s assume there is some standard for what counts as L, M, or H effort, say in developer-hours. Most teams likely haven’t standardized this, but I will assume it for the sake of argument. Now you have to assign a number. Should it be 1, 5, 10 or 5, 25, 50? The choice of scale has a huge impact on the final score.

Combine all four numbers and you get a score for each item on your list.

Can you see how easy these scores are to manipulate?

Want to include your pet feature? Compress the effort scale and inflate the confidence scale. You don’t have to downgrade the feature’s effort rating itself, just the numbers behind the scale. For example, change the effort scale from 5, 50, 100 to 1, 3, 8.

Then watch the numbers change.
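To make this concrete, here is a minimal Python sketch. It assumes the usual (Reach × Impact × Confidence) / Effort formula. The two backlog items and their reach, impact and confidence values are hypothetical; only the two effort scales (5, 50, 100 and 1, 3, 8) come from the example in the text.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # hypothetical units, e.g. users affected
    impact: float      # hypothetical multiplier
    confidence: float  # 0.0 to 1.0
    effort: str        # "L", "M" or "H"


def rice_score(f: Feature, effort_scale: dict[str, float]) -> float:
    """(Reach x Impact x Confidence) / Effort, with effort looked up in the given scale."""
    return f.reach * f.impact * f.confidence / effort_scale[f.effort]


def rank(features: list[Feature], effort_scale: dict[str, float]) -> list[str]:
    """Return feature names sorted by descending RICE score."""
    ordered = sorted(features, key=lambda f: rice_score(f, effort_scale), reverse=True)
    return [f.name for f in ordered]


# Hypothetical backlog: nothing about these two items changes between runs.
backlog = [
    Feature("quick_win", reach=100, impact=1, confidence=1.0, effort="L"),
    Feature("pet_feature", reach=1000, impact=2, confidence=0.5, effort="H"),
]

original_scale = {"L": 5, "M": 50, "H": 100}
tweaked_scale = {"L": 1, "M": 3, "H": 8}  # same L < M < H order, different spacing

print(rank(backlog, original_scale))  # ['quick_win', 'pet_feature']
print(rank(backlog, tweaked_scale))   # ['pet_feature', 'quick_win']
```

The pet feature is still rated high effort in both runs. Only the numbers behind L, M and H moved (scores go from 20 vs 10 to 100 vs 125), and that alone flips the ranking.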

My biggest pet peeve is that priority ends up being driven by effort. Items with low effort get high scores, so you tend to prioritize these “low-hanging fruits” regardless of impact.

What if something is high effort but the impact is huge?
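A second hypothetical sketch shows the low-hanging-fruit effect. Every number here is invented for illustration, and effort is assumed to be measured in person-months. The “big bet” has far more expected impact than the three small tweaks combined, yet it ranks last because effort sits in the denominator.

```python
def rice_score(reach, impact, confidence, effort):
    """(Reach x Impact x Confidence) / Effort."""
    return reach * impact * confidence / effort


def expected_impact(reach, impact, confidence, effort):
    """Reach x Impact x Confidence: the RICE numerator, with effort ignored."""
    return reach * impact * confidence


# name -> (reach, impact, confidence, effort in person-months); all values hypothetical
backlog = {
    "big_bet": (500, 3.0, 0.8, 10.0),
    "tweak_a": (200, 0.5, 1.0, 0.5),
    "tweak_b": (150, 0.5, 1.0, 0.5),
    "tweak_c": (120, 0.5, 1.0, 0.25),
}

ranked = sorted(backlog, key=lambda name: rice_score(*backlog[name]), reverse=True)
print(ranked)  # ['tweak_c', 'tweak_a', 'tweak_b', 'big_bet']

# Compare the big bet's impact with all three tweaks added together.
tweaks_total = sum(expected_impact(*v) for k, v in backlog.items() if k != "big_bet")
print(expected_impact(*backlog["big_bet"]), tweaks_total)  # 1200.0 235.0
```

In this made-up backlog, the big bet carries roughly five times the expected impact of all three tweaks combined, but its RICE score (120) is the lowest on the list.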

As product managers, it is our job to ensure we build those aspects of the product that provide maximum business impact.

So what is the alternative?

My preferred approach is impact-based. For a more detailed explanation, you can read my article, “The conundrum of release planning.”

Here is a short summary of the steps to create a near-perfect release plan that is aligned with core business objectives:

1. Determine the core business priorities of the company.
2. Make your candidate list across the three streams, along with the impact of each item.
3. Select the items from the list that meet the business objectives.
4. Assess the trade-offs.
5. Obtain buy-in from management.

This is not a perfect process, but it gets you started. At the very least, it forces you to seriously consider business impact as a criterion.

The big debates are no longer about how much to focus on new innovation versus improvements or fixes. Instead, the discussion focuses on which initiatives have the best chance of meeting your key business objectives.

With this approach, you have aligned on objectives, selected impactful initiatives, and made sure everyone understands the trade-offs.

Remember, this is not meant to be a mathematical or spreadsheet exercise. As you can see, you sometimes have to make a judgment call.