It's important to know the difference between complex and complicated, especially in the age of AI

Are complex and complicated synonyms?

Have you been in a situation where you are trying to explain some logic in your product to stakeholders or executives, or where you are listening to an engineer explain something to you? You go through many different subsystems and try to connect them all.

But you lose track. Then you say, “Oh, it’s complex.”

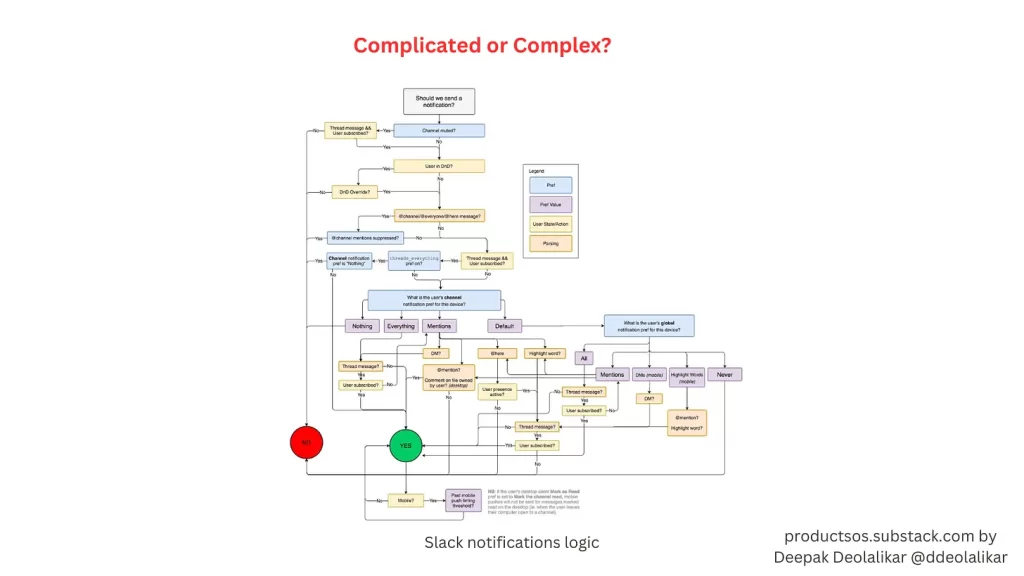

This graphic of Slack’s notification logic has been going around on social media for a while. What do you think?

Is it complex or complicated?

In most business lingo, complex and complicated are used interchangeably.

But there is a difference, and it affects how we approach newer solutions that use AI.

What’s the difference?

Complicated systems have a number of subsystems, all interrelated. However, you can reliably determine the output of a complicated system if you break it down into subsystems and processes and follow the rules of each component.

For example, a space rocket or an airplane engine is a complicated system. It has millions of subsystems, all integrated and interdependent. But the behavior of the rocket is entirely predictable. You can model every input (fuel, weather, weight, wind) and predict the fuel burn or the acceleration.

It’s not easy. You may need a supercomputer to do the calculations, because, well, it is complicated. But every component can be predicted with precision.

Machines and computer programs are complicated systems, but entirely predictable. Your social media feed, your Slack notification algorithm and self-driving cars are all predictable. You can break each of these systems down and study or test the parts independently.

Note: Accidents happen, but that’s because we humans have not accounted for every possible scenario. If you know that the outside temperature affects the O-ring, you add that to your system tests. At the time of the Challenger disaster, the temperature impact simply was not accounted for. (Well, technically, the engineers knew but management disagreed, but that’s a different story.)

But it was not a prediction problem.

Complex systems can also be complicated, but there is one big difference: it’s hard to reliably predict the output for a given input.

The human body is complex. You can’t tell for sure whether a particular medicine will work the same way in different patients, or even in you from one time to the next. If you are exposed to a virus, sometimes you get sick and sometimes you don’t. You can break the body’s chemistry and physiology down to its constituent parts and still not figure out why the outputs vary. That’s complex.

The economy, stock markets, interest rates, and how your competitors or customers will respond are not predictable. You can assign probabilities. For example, if we add $$ of paid ads to our PLG motion, then more customers will probably convert. You can certainly improve on the probabilities, but you still cannot predict the outcome accurately and consistently.

So what has that got to do with us product managers?

It is important for you as a PM to understand the difference between complicated and complex.

Up until now, all our products were predictable. When I was at SugarCRM, our application was complicated, with multiple modules, customization, role-based access, team-based permissions, long queries and so on. When we tried to explain the system or create test plans, we had to take a number of inputs and scenarios into consideration.

But at the end of the day, we could predict the system behavior based on the known inputs. We still had bugs because we had not accounted for all permutations of inputs.

So the onus was on us to make sure we accounted for all possible use cases and test cases. The final product would then behave predictably, the way we intended it to.
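To make "predictable" concrete, here is a minimal sketch of a deterministic access rule and a test that enumerates every combination of inputs. The roles, actions and the rule itself are hypothetical and are not SugarCRM's actual logic. The point is only that a complicated system can be checked exhaustively with exact assertions.

```python
from itertools import product

# Hypothetical inputs, chosen for illustration only.
ROLES = ("admin", "manager", "rep")
ACTIONS = ("view", "edit", "delete")


def can_access(role: str, same_team: bool, action: str) -> bool:
    """Hypothetical access rule: fixed logic, so the output is fully determined."""
    if role == "admin":
        return True
    if not same_team:
        return False
    if role == "manager":
        return True
    return action in ("view", "edit")  # reps on the team cannot delete


def decision_table() -> dict:
    """Evaluate the rule for every permutation of inputs."""
    return {
        (role, same_team, action): can_access(role, same_team, action)
        for role, same_team, action in product(ROLES, (True, False), ACTIONS)
    }


table = decision_table()
assert len(table) == 18                 # 3 roles x 2 team states x 3 actions
assert table == decision_table()        # same inputs, same outputs, every time
assert table[("rep", True, "delete")] is False
```

With more modules and permissions the table grows quickly, which is where missed permutations and bugs come from, but each row still has exactly one correct answer.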

Enter AI.

Over the last couple of years, AI has gained significant ground in all aspects of product development. Nearly every feature now seems to have some element of AI.

The challenge is that AI is non-deterministic.

Take ChatGPT.

If you ask the same prompt a few times, you will get different answers. As a PM, you are delivering an AI solution to a customer. How do you make sure the answers your AI delivers are acceptable? It’s not like a data query, which you can model accurately.

What about AI image or video generation? In addition to accuracy, the taste and style will vary and be very subjective.

Why AI outputs vary is beyond the scope of this article.

The point is that we will need to set boundaries on which outputs are acceptable and which are not.

Let’s consider the scenario of developing an AI system that generates text recommendations. Given the inherent unpredictability of user interactions, it becomes challenging to ascertain what constitutes acceptable content.

But what you should do is define what is acceptable. Set up some norms for acceptable text and ensure users don’t see something unacceptable.

Achieving this goal entails a thorough examination of several key aspects:

Ensuring Unbiased AI Models: It is paramount to validate that AI outputs adhere to the established norms within your domain. Preventing the dissemination of inappropriate language, particularly concerning sensitive topics like race and gender, is crucial. However, achieving this requires ongoing effort, as the field of bias mitigation in AI is continuously evolving.

Refining Prompting Precision: Precision in prompting is essential to filter out undesirable outputs effectively. Engineering prompts with greater specificity can aid in this process. Iteratively refining prompts based on insights gained from initial model iterations helps ascertain the AI model’s capabilities and limitations, enabling more effective control over generated content.

Comprehensive Testing Parameters: Crafting test parameters encompassing a spectrum of acceptable and unacceptable outputs is essential. QA poses a significant challenge due to the inability to devise deterministic test plans. Nevertheless, continuous testing is crucial to gauge the AI’s performance and ensure a trajectory toward an expanding range of acceptable outputs.
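One way to approach testing without a deterministic test plan is to run the same prompt many times and measure how often the output falls inside your acceptable range. The sketch below assumes a hypothetical set of norms (`BLOCKED_TERMS`, `MAX_WORDS`) and uses `fake_model`, a random stand-in for a real model call, so the names and numbers are illustrative only.

```python
import random

# Hypothetical norms for acceptable text; a real product would define its own.
BLOCKED_TERMS = {"guaranteed", "risk-free"}
MAX_WORDS = 12


def is_acceptable(text: str) -> bool:
    """Return True if the text is non-empty, short enough and has no blocked term."""
    words = [w.strip(".,!") for w in text.lower().split()]
    if not 0 < len(words) <= MAX_WORDS:
        return False
    return not any(w in BLOCKED_TERMS for w in words)


def fake_model(prompt: str, rng: random.Random) -> str:
    """Stand-in for a real model call: returns one of several canned replies at random."""
    replies = [
        "Try the starter plan for small teams.",
        "The starter plan fits teams under ten people.",
        "This plan is guaranteed to double your revenue!",
    ]
    return rng.choices(replies, weights=[6, 3, 1])[0]


def pass_rate(prompt: str, runs: int, rng: random.Random) -> float:
    """Run the same prompt `runs` times and return the share of acceptable outputs."""
    passed = sum(is_acceptable(fake_model(prompt, rng)) for _ in range(runs))
    return passed / runs


rng = random.Random(0)  # seeded only so the sketch is repeatable
rate = pass_rate("Recommend a plan", runs=500, rng=rng)
print(f"acceptable outputs: {rate:.1%}")
```

Instead of asserting one exact output, the test plan asserts a threshold, for example that the pass rate stays above a target you choose, and tracks whether that rate improves as prompts and models are refined.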

Furthermore, as AI systems advance, we will see more and more accurate outputs. Until then, we need to keep monitoring what our users receive as AI output, and tweak our prompts and models on a continuous basis.
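As a rough illustration of continuous monitoring, the sketch below keeps a rolling window of recent outputs and flags when the share of acceptable ones drops below a target. The class name, window size and threshold are assumptions made for this example. How each output gets marked acceptable (user feedback, human review, an automated filter) is left open.

```python
from collections import deque


class AcceptanceMonitor:
    """Tracks the share of acceptable outputs over the most recent `window` responses."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.alert_below = alert_below

    def record(self, acceptable: bool) -> None:
        self.results.append(acceptable)

    def rate(self) -> float:
        # With no data yet, report 1.0 so an empty monitor does not raise an alert.
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self) -> bool:
        # Only alert once the window is full, to avoid noise from the first few outputs.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.rate() < self.alert_below


monitor = AcceptanceMonitor(window=5, alert_below=0.8)
for ok in [True, True, False, True, False, False]:
    monitor.record(ok)

if monitor.needs_attention():
    print(f"acceptable rate fell to {monitor.rate():.0%}; review prompts and model")
```

A drop in the rolling rate is the signal to go back and adjust the prompts or the model.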

Conclusion

As a PM, it is critical to distinguish whether a system you are designing is complex or complicated. Your design, logic and test patterns will vary accordingly.

For complex systems such as AI models, define a spectrum of what is acceptable and what’s not.